
Spring School on Machine Learning and Perception for Social Awareness

13-16 April 2026

Location: Catania, Italy

About the SWEET Spring School

The SWEET Spring School is a specialized training initiative organized entirely by the SWEET project consortium in conjunction with the TRAIL conference, and it is open to external students and researchers. Its core objective is to explore in depth the disciplines related to Social Awareness from the perspective of perception in Service Robotics.

The curriculum is designed to equip participants with a robust set of skills through a series of lectures and hands-on practical sessions. The focus will be on cutting-edge research methods for sensing and the development of machine learning algorithms essential for enhancing robots’ social perception capabilities.

Participation in the school is free of charge.

However, please note that all logistical costs, including travel and accommodation expenses, must be covered by the individual participants.

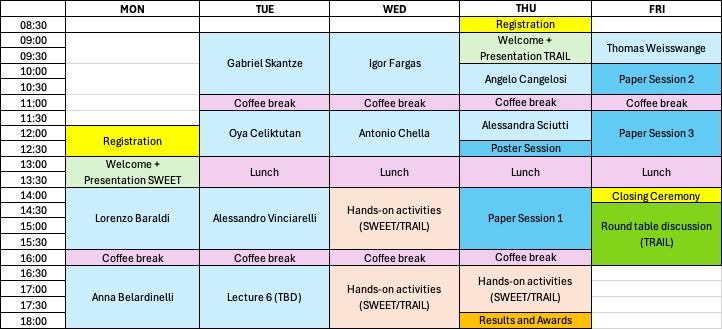

Programme (TBC)

Speakers

Prof. Alessandro Vinciarelli

Full Professor at University of Glasgow

An Introduction to Social AI

The presentation introduces Social AI, the domain aimed at modelling, analysis and synthesis of human-human and human-machine interactions. After outlining the scope of the field (what Social AI is about and what its most important scientific objectives are), the presentation will cover its most distinctive aspects. Special attention will be paid to the interdisciplinary collaboration with Psychology and other Human Sciences, because Social AI is about people as much as it is about machines. Finally, a few examples will provide a chance to develop familiarity with the approaches, methodologies and research problems common to most Social AI works.

Biography

Alessandro Vinciarelli (http://vinciarelli.net) is Full Professor at the University of Glasgow, where he is affiliated with the School of Computing Science and the Institute of Neuroscience and Psychology. His main research interest is Social AI, the domain aimed at modelling, analysis and synthesis of verbal and nonverbal behaviour in human-human and human-machine interactions. He has published over 200 works in international journals and conferences, and he is or has been Principal Investigator in more than 15 projects, including an EU-funded European Network of Excellence (the SSPNet, 2009-2014, 6.2M euros) and the UKRI Centre for Doctoral Training in Socially Intelligent Artificial Agents (2019-2027, 6M euros, http://socialcdt.org). Alessandro has co-organized more than 30 international events as General Chair (IEEE International Conference on Social Computing 2012, ACM International Conference on Multimodal Interaction 2017, International Conference on Digital Mental Health and Wellbeing 2026, etc.), as Program Chair (ACM International Conference on Multimodal Interaction 2023, Affective Computing and Intelligent Interaction 2024) or in other roles. In addition, Alessandro is co-founder of Klewel (http://klewel.com), a knowledge management company recognised by the IEEE as an exemplary impact story, and scientific advisor to Substrata (http://substrata.me), a leading Social Signal Processing company.

Prof. Ing. Igor Farkaš

Full Professor at Comenius University Bratislava

Machine learning for robot perception and control

Machine learning (ML) is a fundamental bio-inspired approach to building AI systems optimized for various tasks, including robot learning. In this talk, we will present several ML methods based on artificial neural networks that are useful in this domain. We will cover two paradigms: first, self-supervised learning, which can be exploited to build world models (latent representations) that robots need in order to represent the world; second, reinforcement learning, which robots use to learn a desired behavior (policy).

1. Self-Supervised Learning (SSL) for Robot Perception and Representation: methods that allow robots to learn from raw sensory data without manual labeling.

(a) Representation learning: contrastive learning, which learns state embeddings by distinguishing positive samples (e.g., time-adjacent frames) from negative samples.

(b) Predictive modeling (forward and inverse models) and multimodal alignment.

2. Reinforcement Learning (RL) for Robot Control and Decision-Making: methods that optimize behavior through trial and error.

(a) Model-free RL: value-based methods (e.g., DQN), policy-gradient methods (e.g., PPO, TRPO, A2C) and actor-critic methods (e.g., SAC, TD3), common for continuous-control robotic tasks such as grasping and locomotion.

(b) Model-based RL: learned dynamics models (e.g., Dreamer) that use predicted future trajectories for planning or policy learning, and hybrid planning and learning, which combines model-based planning such as model predictive control (MPC) with learned controllers.
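The contrastive objective listed under 1(a) above can be illustrated with a minimal InfoNCE-style loss. This is a sketch under stated assumptions, not the method presented in the lecture: the function names, the cosine similarity, the temperature of 0.1 and the use of in-batch negatives (every other pair's successor frame counts as a negative) are all illustrative choices. In practice the embeddings would be produced by a neural encoder trained by gradient descent on this loss.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float], eps: float = 1e-12) -> float:
    """Cosine similarity between two embedding vectors (eps guards zero norms)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / max(norm_a * norm_b, eps)


def info_nce_loss(
    anchors: List[Sequence[float]],
    positives: List[Sequence[float]],
    temperature: float = 0.1,  # assumed value, typically tuned
) -> float:
    """Mean InfoNCE loss with in-batch negatives (hypothetical helper).

    anchors[i] and positives[i] embed time-adjacent frames (t, t+1);
    every positives[j] with j != i serves as a negative for anchors[i].
    """
    if len(anchors) != len(positives) or len(anchors) < 2:
        raise ValueError("need at least two (anchor, positive) pairs of equal count")
    total = 0.0
    for i, anchor in enumerate(anchors):
        # Temperature-scaled similarity of anchor i to every candidate in the batch.
        logits = [cosine_similarity(anchor, p) / temperature for p in positives]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        # Cross-entropy with the matching (time-adjacent) frame as the target class.
        total += log_sum_exp - logits[i]
    return total / len(anchors)


# Hypothetical 2-D embeddings of frames t (anchors) and t+1 (positives).
example_anchors = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
example_positives = [[0.9, 0.1], [0.1, 0.9], [-0.9, -0.1]]
print(info_nce_loss(example_anchors, example_positives))
```

Because each anchor is most similar to its own successor in this toy batch, the loss is close to zero; shuffling the positives so that anchors no longer line up with their successors makes it much larger, which is the signal that pushes an encoder toward temporally consistent state embeddings.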

Biography

Igor Farkaš is a professor of informatics at the Department of Applied Informatics, Faculty of Mathematics, Physics and Informatics, Comenius University Bratislava (UKBA). He obtained a master’s degree in technical cybernetics (1991) and a PhD in applied informatics (1995), both from the Slovak University of Technology in Bratislava. He received a prestigious Fulbright scholarship (University of Texas at Austin, US) and a Humboldt scholarship (Saarland University in Saarbrücken, Germany). His research interests span the related fields of artificial intelligence and cognitive science, specifically artificial neural networks and their analysis (explainable AI), cognitive robotics, language modeling, reinforcement learning (intrinsic motivation), abstract cognition and, more recently, human-robot interaction. Prof. Farkaš teaches courses on neural networks, computational intelligence, deep learning and cognition, and, until recently, grounded cognition. He coordinates the Centre for Cognitive Science at UKBA and serves as the main guarantor of the international Middle-European Interdisciplinary master’s programme in cognitive science (MEi:CogSci). More information is available at http://cogsci.fmph.uniba.sk/~farkas.

Prof. Lorenzo Baraldi

Associate Professor at University of Modena and Reggio Emilia

Towards Reliable and Adaptive Multimodal LLM Agents

The rapid progress of multimodal Large Language Models (MLLMs) is reshaping how intelligent agents perceive, reason, and act within visually rich and interactive environments. As these systems advance, new challenges emerge in achieving coherent multimodal understanding, robust generalization, and transparent decision-making, including in embodied scenarios where perception and action are tightly intertwined.

In this talk, I will present research directions that strengthen visual grounding, reduce hallucinations, and improve reasoning in knowledge-intensive contexts. I will discuss how addressing missing or imperfect modalities leads to more resilient multimodal integration, how reflective mechanisms can enhance deliberation, and how structured representations (ranging from recurrent visual-linguistic processing to non-Euclidean embedding spaces) enable richer alignment between observations, instructions, and actions.

Further, the talk explores how agent behavior can be better aligned with user intent in interactive and embodied settings through personalization and improved modeling of contextual cues.

Biography

Lorenzo Baraldi is an Associate Professor at the University of Modena and Reggio Emilia, where he works on Deep Learning, Vision-and-Language integration, Large-Scale models and Multimedia. He teaches the courses “Computer Vision and Cognitive Systems”, “Scalable AI”, and “Computer Architecture”. He has authored more than 120 publications in international journals and conferences. He currently serves as an Associate Editor for Computer Vision and Image Understanding and for Pattern Recognition, and acts as an Area Chair for ICCV and major multimedia conferences. He is also a Scholar in the ELLIS society (European Laboratory for Learning and Intelligent Systems), where he coordinates the Modena ELLIS Unit. Since 2021, he has held the position of deputy director at the Interdepartmental Center on Digital Humanities at the University of Modena and Reggio Emilia.

Prof. Gabriel Skantze

Full Professor at KTH Royal Institute of Technology

Conversational human-robot interaction

Conversational AI has evolved rapidly in recent years, driven largely by advancements in LLMs. However, significant challenges remain, particularly in spoken human-robot interaction. In this domain, the physical context is critical, interactions often involve multiple users, and the robot’s physical embodiment plays a key role. This talk will address specific challenges related to turn-taking, feedback, and conversational speech synthesis.

Biography

Gabriel Skantze is a Professor at KTH Royal Institute of Technology in Stockholm, Sweden, where he leads several research projects related to speech communication, conversational AI, and human-robot interaction. His research is highly interdisciplinary, encompassing topics such as computational modelling of turn-taking, feedback and gaze in interaction, language learning, and language grounding. He is the former President of SIGDIAL, the ACL special interest group on Discourse and Dialogue. He is also co-founder and Chief Scientist of the company Furhat Robotics.

Prof. Oya Celiktutan

Associate Professor at King’s College London

Abstract

….

Biography

….

Prof. Antonio Chella

Full Professor at University of Palermo

Engineering Self-Conscious Robots: Cognitive Architectures and Mechanisms

This lecture addresses robot self-consciousness from the perspectives of computational and cognitive architectures, focusing on mechanisms that enable an artificial agent to represent, monitor, and regulate its own internal states and actions. Building on foundational theories of consciousness and self-awareness, the lecture examines how self-consciousness can be operationalized in robots through internal self-models, predictive processing, and global workspace–like control structures. Particular emphasis is placed on the role of embodiment and inner speech as recursive feedback mechanisms that support self-monitoring, decision-making, and behavioral coherence. Concrete robotic architectures and experimental case studies are discussed to illustrate how minimal forms of self-consciousness can emerge from the integration of perception, action, attention, and internal simulation. The lecture also reviews current approaches to evaluating robotic self-consciousness and highlights open theoretical, empirical, and ethical challenges in the design of self-aware artificial agents.

Biography

Antonio Chella is Professor of Robotics at the University of Palermo, where he founded and directs the RoboticsLab. His research focuses on cognitive architectures, artificial consciousness, self-awareness in robots, and socially assistive robotics. He has been actively involved in interdisciplinary research at the intersection of AI, cognitive science, and philosophy of mind. He has coordinated or participated in several national and international research projects. He is the author of numerous scientific publications on machine consciousness, inner speech, and self-aware robotic systems.

Dr. Anna Belardinelli

Principal Scientist at Honda Research Institute

“Do you get me?”: Multimodal communication for situated human-robot interaction

Intelligible, meaningful, and grounded communication is a fundamental requirement for successful human–robot interaction. However, such communication cannot assume symmetric cognitive and expressive capabilities between humans and robots. Acknowledging this asymmetry, interaction design must focus on establishing common ground and fostering mutual understanding. Robots should therefore communicate their perceptions, reasoning processes, and action capabilities in an intuitive and explainable manner, potentially using modalities that differ from those employed by humans. Conversely, robots must be able to interpret human intentions by integrating verbal and non-verbal cues within their situational context. In this talk, these principles are illustrated through theoretical and experimental insights from cognitive science, alongside our own implementations and empirical studies of human–robot interaction in socio-physical settings. I’ll present work on AR-based learning from demonstration, attentive support in multiparty interactions, and the integration of language with complementary expressive cues.

Biography

Anna Belardinelli is Principal Scientist at the Honda Research Institute Europe, working on human-robot interaction. She has an interdisciplinary background, with interests at the intersection of Artificial Intelligence and Cognitive Science. Her research efforts have spanned visual attention in humans and machines, eye-hand coordination for manipulation and teleoperation, and computational models that support intelligent behavior in robots and interactive systems.

How to Apply

To apply for the Spring School, please submit the following documents:

- A short Statement of Interest (max 1 page).

- Your current Curriculum Vitae (CV).

- A Letter of Support from your Tutor/Supervisor.

All application materials must be sent via email to: infosweet@unina.it

APPLICATION DEADLINE: February 28th, 2026

Acceptance Notification: March 15th, 2026

Don’t Miss the Final Conference!

TRAIL Conference Keynote Speakers

In conjunction with the Spring School, the TRAIL conference will feature the following keynote speakers:

| Name | Affiliation |
|---|---|
| Prof. Angelo Cangelosi | University of Manchester |
| Dr. Alessandra Sciutti | Italian Institute of Technology (IIT) |
| Dr. Thomas Weisswange | Honda Research Institute |